Part 1: parsing data

Well, I recently review a manuscript on predicting the so-called "protein-protein interactions" with a convolutional neural network (CNN). The quality of the paper was exceptionally low, the authors are from different institutions that they are really unlikely to collaborate with each other. It is highly likely that the paper was from a paper mill. Nonetheless, as I have never done formal research on biological system, the draft still helped to me know something in the field. I was really curious on the validity of their results and if I could achieve better predictions, so here it is.

What are they doing here

They are basically doing a sequence based binary classification. Some protein pairs are divided into two categories, interacting paris and non-interacting pairs. As far as I understand, "interacting" means the two proteins can bind with each other, while "non-interacting" ones will repulse each other (if they are close enough).

This problem sounds really trivial to me. You just have to process two strings (one-letter amino acid sequence of a protein is just a string) and classify them in two two group. I felt I should be able to get it done pretty quickly, so I started looking into it.

I have attached the original notebook to this site and can be downloaded here, so you can play with it yourself.

The dataset

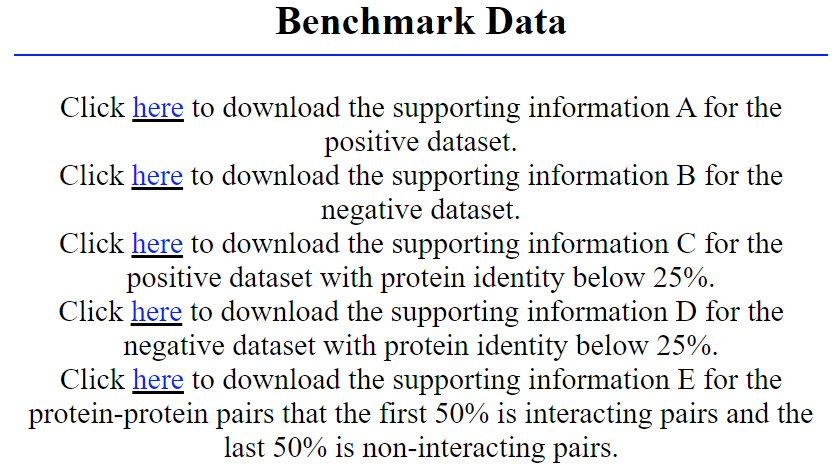

They were dealing with several datasets from different sources, but focused on one dataset published by a group from SJTU.

The two files used are supporting information A and B, for interacting and non-interacting pairs, respectively. As the names suggest, these files are SI for a certain paper. However, I have no idea who decided a MS Word document could be a good choice in this case.

The two files consist of a header

Online support information A

There are 36,630 protein-protein pairs from total 9476 proteins, and the first column is protein ID from HPRD, the second column is the other protein ID and the two proteins constitute the positive Protein-protein interaction.

Index Protein_1_ID protein_2_ID

and blocks like the following

1 NP_663777.1 NP_001233.1

>NP_663777.1

MESSKKMDSPGALQTNPPLKLHTDRSAGTPVFVPEQGGYKEKFVKTVEDKYKCEKCHLVLCSPKQTECGHRFCESCMAALLSSSSPKCTACQESIVKDKVFKDNCCKREILALQIYCRNESRGCAEQLMLGHLLVHLKNDCHFEELPCVRPDCKEKVLRKDLRDHVEKACKYREATCSHCKSQVPMIALQKHEDTDCPCVVVSCPHKCSVQTLLRSELSAHLSECVNAPSTCSFKRYGCVFQGTNQQIKAHEASSAVQHVNLLKEWSNSLEKKVSLLQNESVEKNKSIQSLHNQICSFEIEIERQKEMLRNNESKILHLQRVIDSQAEKLKELDKEIRPFRQNWEEADSMKSSVESLQNRVTELESVDKSAGQVARNTGLLESQLSRHDQMLSVHDIRLADMDLRFQVLETASYNGVLIWKIRDYKRRKQEAVMGKTLSLYSQPFYTGYFGYKMCARVYLNGDGMGKGTHLSLFFVIMRGEYDALLPWPFKQKVTLMLMDQGSSRRHLGDAFKPDPNSSSFKKPTGEMNIASGCPVFVAQTVLENGTYIKDDTIFIKVIVDTSDLPDP

>NP_001233.1

MARPHPWWLCVLGTLVGLSATPAPKSCPERHYWAQGKLCCQMCEPGTFLVKDCDQHRKAAQCDPCIPGVSFSPDHHTRPHCESCRHCNSGLLVRNCTITANAECACRNGWQCRDKECTECDPLPNPSLTARSSQALSPHPQPTHLPYVSEMLEARTAGHMQTLADFRQLPARTLSTHWPPQRSLCSSDFIRILVIFSGMFLVFTLAGALFLHQRRKYRSNKGESPVEPAEPCRYSCPREEEGSTIPIQEDYRKPEPACSP

In side each block, the first line is the index for the pair, and their

RefSeq IDs. Then the next line will start with a > symbol and

followed by the RefSeq ID of the first protein. The next line is the amino acid

sequence of the protein in the one letter code. Similarly, the next 2 lines are

for protein 2.

Process the Word documents

Now I need find a programming way to deal with these two files. There are some Python packages for parsing MS Word documents, but I did not have good luck with it, possibly because these files are in the Word 2003 format.

After some googling, I decided to give antiword a shot. You can easily install it via the package manager from your OS.

# download the MS Word file

wget http://www.csbio.sjtu.edu.cn/bioinf/LR_PPI/Supp-A.doc

# well, I use debian and ubuntu anyway...

sudo apt update && apt install antiword

# convert!

antiword Supp-A.doc > Supp-A.txt

A very smooth process. It worked like a charm. The resulting Supp-A.txt is

just a plaintext version of the Word document. The only difference is that the

soft wrap in the Word document is now a hard wrap, meaning that the super long

sequences will be break into multiple lines. This makes parsing the file a

little bit more tedious. Before, you know that the sequence is always stored in

one line.

Online support information A

There are 36,630 protein-protein pairs from total 9476 proteins, and the

first column is protein ID from HPRD, the second column is the other

protein ID and the two proteins constitute the positive Protein-protein

interaction.

Index Protein_1_ID protein_2_ID

1 NP_663777.1 NP_001233.1

>NP_663777.1

MESSKKMDSPGALQTNPPLKLHTDRSAGTPVFVPEQGGYKEKFVKTVEDKYKCEKCHLVLCSPKQTECGHRFCESC

MAALLSSSSPKCTACQESIVKDKVFKDNCCKREILALQIYCRNESRGCAEQLMLGHLLVHLKNDCHFEELPCVRPD

CKEKVLRKDLRDHVEKACKYREATCSHCKSQVPMIALQKHEDTDCPCVVVSCPHKCSVQTLLRSELSAHLSECVNA

PSTCSFKRYGCVFQGTNQQIKAHEASSAVQHVNLLKEWSNSLEKKVSLLQNESVEKNKSIQSLHNQICSFEIEIER

QKEMLRNNESKILHLQRVIDSQAEKLKELDKEIRPFRQNWEEADSMKSSVESLQNRVTELESVDKSAGQVARNTGL

LESQLSRHDQMLSVHDIRLADMDLRFQVLETASYNGVLIWKIRDYKRRKQEAVMGKTLSLYSQPFYTGYFGYKMCA

RVYLNGDGMGKGTHLSLFFVIMRGEYDALLPWPFKQKVTLMLMDQGSSRRHLGDAFKPDPNSSSFKKPTGEMNIAS

GCPVFVAQTVLENGTYIKDDTIFIKVIVDTSDLPDP

>NP_001233.1

MARPHPWWLCVLGTLVGLSATPAPKSCPERHYWAQGKLCCQMCEPGTFLVKDCDQHRKAAQCDPCIPGVSFSPDHH

TRPHCESCRHCNSGLLVRNCTITANAECACRNGWQCRDKECTECDPLPNPSLTARSSQALSPHPQPTHLPYVSEML

EARTAGHMQTLADFRQLPARTLSTHWPPQRSLCSSDFIRILVIFSGMFLVFTLAGALFLHQRRKYRSNKGESPVEP

AEPCRYSCPREEEGSTIPIQEDYRKPEPACSP

Anyway, once we have a plaintext file, reading it is always easy. After some tweaking, I came up with this way of reading the file.

Let us load necessary packages first.

from copy import deepcopy

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import torch

from torch.utils.data import DataLoader, TensorDataset, random_split

def read_sequence():

digits = {}

for i in range(9):

digits[str(i + 1)] = ""

files = ["Supp-A.txt", "Supp-B.txt"]

types = [1, 0]

skip_lines = [9, 8]

pairs = []

proteins = {}

interactions = []

for _, file in enumerate(files):

count = 0

eof=False

with open(file, "r") as doc:

print(file)

for i in range(skip_lines[_]):

line = doc.readline()

while True:

ids = line.split()[1:]

pairs.append(ids)

# ">ID1" line

line = doc.readline()

for i, id in enumerate(ids):

# read sequence for the first protein

sequence = ""

while True:

line = doc.readline()

# break when 1. ">ID2" or next pair or EOF is read

if not line or line[0] == ">" or line[0] in digits:

if id not in proteins:

proteins[id] = sequence

if not line:

eof=True

elif line[0] == ">":

count += 1

break

sequence += line.rstrip("\n")

# print(count, id, sequence)

if eof:

break

if eof:

interactions += [types[_]] * count

break

return proteins, pairs, interactions

proteins, pairs, interactions = read_sequence()

Supp-A.txt Supp-B.txt

It is a little tricky to make a script to read the script fully automatically. I guess I will add some comments over here later. The resulting sequences need to be further processed before we can use them.

One common thing people in this field do is to exclude all sequences below 50 amino acids and remove all sequences with non-canonical amino acids, for example, in this paper.

# use the dictionary `to_remove` to store any protein ID that follows in the two criteria above

to_remove = {}

for k, v in proteins.items():

if len(v) < 50:

to_remove[k] = ""

continue

# I have checked the sequences, the only "non-canonical" is "U" which is selenocysteine

for l in v:

if l == "U":

to_remove[k] = ""

Then if the keys in to_remove show up in any pair, we remove the pair and

their interaction value from the lists.

for i in range(len(pairs) - 1, -1, -1):

id1, id2 = pairs[i]

if id1 in to_remove or id2 in to_remove:

del pairs[i], interactions[i]

for k in to_remove.keys():

proteins.pop(k)

Then I would like to save the processed data as csv files, though I never use them really...

ppi = {}

for i, (pair, value) in enumerate(zip(pairs, interactions)):

ppi[i] = {"protein 1": pair[0], "protein 2": pair[1], "interaction": value}

protein_df = pd.DataFrame(proteins, index=[0]).transpose()

protein_df.columns = ["sequence"]

interaction_df = pd.DataFrame(ppi).transpose()

protein_df.to_csv("proteins.csv")

interaction_df.to_csv("ppi.csv")

Next, I would like to find a percentile to truncate long sequences. The reason to do it is that vast majority of the sequences are very short (a few hundred), but there are some very long outliers (for example, > 30K). If you allocate a tensor based on the longest sequence, the drawback is obvious. The tensor will be very sparse. As a result, it is wise to cut these long sequences.

Loop through all sequences and get the length of the sequence at 95% percentile.

seq_len = []

for v in proteins.values():

seq_len.append(len(v))

length = int(np.percentile(seq_len, np.arange(0, 100, 5))[-1])

Next, convert the strings of all sequences to a tensor of integers based on ASC II. Note that here we have the tensor of sequences, not pairs yet.

sequences = torch.zeros((len(proteins), length), dtype=int)

for i, v in enumerate(proteins.values()):

for j in range(len(v) if length > len(v) else length):

sequences[i, j] = ord(v[j]) - 64

Then we can build the tensors for the pairs. protein1 and protein2 are the

sequences of the first and second proteins in each pair. targets is the tensor

of labels for whether the pair is interacting or not. I am doing a manual

one-hot encoder here. Of course you can ask torch to do it. Not sure why I

wanted to do it manually.

Here I build another helper dictionary called protein_idx just to quickly

locate the index of each protein in sequences. ($O(1)$ for a hashtable and

$O(n)$ for a list)

protein_idx = {}

count = 0

for k in proteins.keys():

protein_idx[k] = count

count += 1

targets = torch.zeros((len(interactions), 2), dtype=torch.float)

protein1 = torch.empty((len(interactions), length), dtype=torch.int64)

protein2 = torch.empty((len(interactions), length), dtype=torch.int64)

for i, (pair, value) in enumerate(zip(pairs, interactions)):

targets[i, value] = 1

protein1[i] = sequences[protein_idx[pair[0]]]

protein2[i] = sequences[protein_idx[pair[1]]]

We have got all the tensors we need, so we can easily assemble a dataset with

torch's TensorDataset class.

dataset = TensorDataset(protein1, protein2, targets)

After this, we will start to build our network. Please check out the section part of this post over here.

Machine Learning Neural Network Coding Python Torch